Transparency and Intelligibility of AI Systems

For machine learning to be used in practice, its applications must be intelligible and explainable.

Explainable AI is a key topic of current AI research – the “third wave of AI,” which follows “Describing” (first wave: knowledge-based systems) and “Statistical learning” (second wave). It is becoming increasingly clear that, in many areas of application, purely data-driven machine learning is either unsuitable or suitable only when combined with other methods.

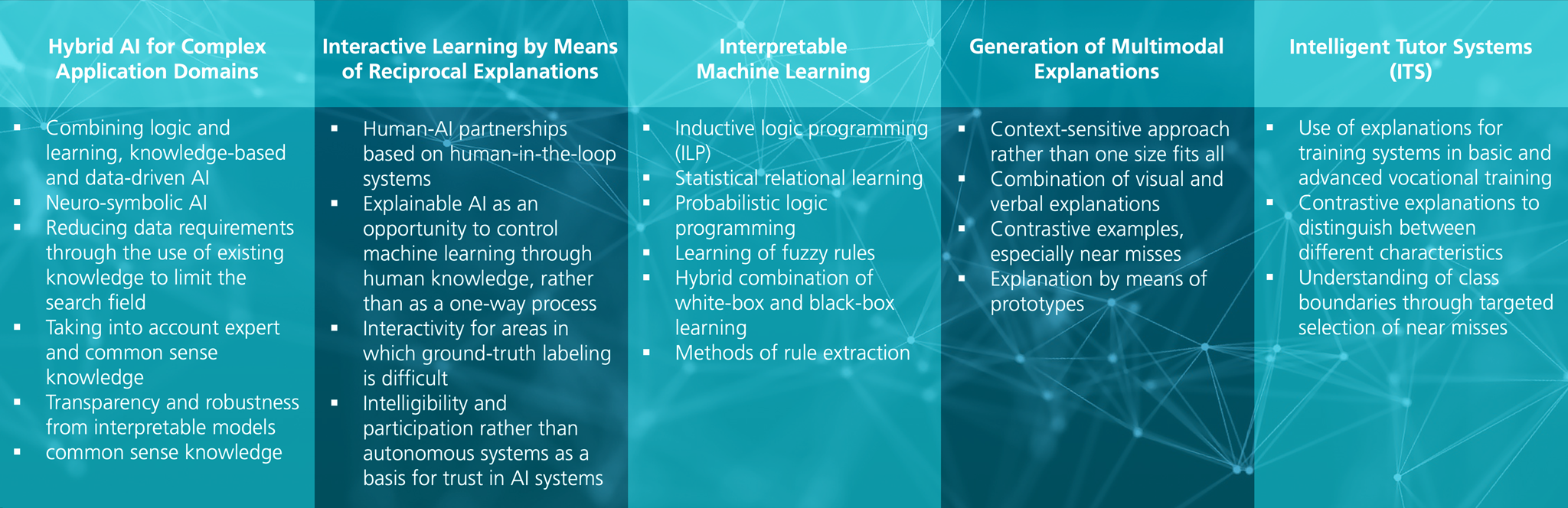

In collaboration with the University of Bamberg, Fraunhofer IIS has set up a “Comprehensible Artificial Intelligence” project group. Its purpose is to develop explainable machine learning methods:

- We are working on hybrid approaches to machine learning that combine black-box methods, such as (deep) neural networks, with methods applied in interpretable machine learning (white-box methods). Such methods enable a combination of logic and learning – and, in particular, a type of learning that integrates human knowledge.

- We are developing methods of interactive and incremental learning for areas of application in which very little data is available and labeling that data is problematic.

- We are developing algorithms to generate multimodal explanations, particularly for a combination of visual and verbal explanations. For this purpose, we draw on research from cognitive science.

Current areas of application:

- image-based medical diagnostics

- facial expression analysis

- quality control in Manufacturing 4.0

- avoiding biases in machine learning

- crop phenotyping

- automotive sector